If you haven’t read our blogs on Kubernetes yet, it may be worth going back and reading them first.

In this post I’d like to talk about the emergence of Serverless Container Engines and how they differ from Kubernetes. The concept of Serverless Container Engines has been around for roughly a year (April 2019) with the main public cloud providers:

- AWS Fargate

- GCP Cloud Run

- Azure Container Instances

As containers have become the preferred way to package, deploy, and manage cloud applications – the public cloud providers saw an opportunity to allow developers to quickly and easily run their containers without worrying about infrastructure.

Traditionally, to run a container in the cloud you would need to either provision a Kubernetes cluster or run the cloud provider’s container service (e.g. AWS Elastic Container Service). Now, however, you have the added option of Serverless Container Engines, which, for example, enable you to run your container on AWS without worrying about the underlying infrastructure – all you have to do is tell AWS how much CPU and memory it needs and away you go. What’s more, you can develop your container in any language you want, as opposed to FaaS such as Lambda, where you are restricted to a small list of languages.

That sounds great, so why isn’t everyone using these services?

There are three main barriers to these services at the moment…

- Cost,

- Portability, and

- Limitations

Costs…

Being able to quickly and easily run containers in a managed environment doesn’t come cheap. What cloud providers are doing here is aiming for the market somewhere between entry-level engineers with little experience of the cloud and engineers who don’t want the hassle of managing a containerised platform.

Let’s have a look at the pricing models…

- AWS Fargate pricing is calculated based on the vCPU and memory resources used from the time you start to download your container image until the time it terminates.

- Cloud Run charges you for the resources you use (CPU, Memory, Requests, Networking), rounded up to the nearest 100 milliseconds.

- Azure Container Instances pricing is based on the number of vCPUs and GBs of memory allocated to the container group. You are charged for each GB-second and vCPU-second that your container group consumes.
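
To make these billing models concrete, here is a minimal Python sketch of the two mechanics they share: rounding usage up to a billing increment, and charging per second for allocated vCPU and memory. The function names and the rates are illustrative placeholders, not real prices from any provider, so substitute the figures from your provider's current price list.

```python
import math


def billable_ms(duration_ms: float, increment_ms: int = 100) -> int:
    """Round a duration up to the next billing increment (100 ms by default)."""
    return math.ceil(duration_ms / increment_ms) * increment_ms


def allocation_cost(vcpu: float, memory_gb: float, seconds: float,
                    vcpu_rate: float, gb_rate: float) -> float:
    """Cost of holding `vcpu` and `memory_gb` for `seconds`, billed per second.

    `vcpu_rate` is the price per vCPU-second and `gb_rate` the price per
    GB-second. Both are inputs because real prices vary by provider and region.
    """
    return seconds * (vcpu * vcpu_rate + memory_gb * gb_rate)


# Placeholder rates, chosen only to make the example run (NOT real prices).
VCPU_RATE = 0.00001   # per vCPU-second
GB_RATE = 0.000001    # per GB-second

print(billable_ms(230))  # 300: a 230 ms request is billed as 300 ms

# The same 1 vCPU / 2 GB container as a 10-minute batch job and as a
# service left running for a 30-day month.
batch_job = allocation_cost(1, 2, 10 * 60, VCPU_RATE, GB_RATE)
always_on = allocation_cost(1, 2, 30 * 24 * 3600, VCPU_RATE, GB_RATE)
print(f"batch job: {batch_job:.4f}, always on: {always_on:.2f}")
```

Whatever the rates are, the cost grows in direct proportion to running time, so the gap between a short job and an always-on service is large.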

These pricing models show that these services are better suited to running predictable workloads for short periods of time, for example batch workloads or ad-hoc jobs. If you want to run services like 24/7 web applications, costs can mount up quickly.

As with all services, there are trade-offs: if you’re able to run a Kubernetes cluster at roughly 80% utilisation on each node, it works out cheaper than Serverless Container Engines, and you get all of the Kubernetes benefits (outlined below).
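
The utilisation trade-off can be sketched as a simple break-even calculation. This is a rough model under stated assumptions: it compares vCPU cost only, ignores memory, control-plane fees and engineering time, and every price in it is a made-up placeholder rather than a real quote.

```python
def cost_per_used_vcpu_hour(node_price_per_hour: float, node_vcpus: int,
                            utilisation: float) -> float:
    """Effective price of each vCPU-hour you actually use on a cluster node.

    You pay for the whole node, so the lower the utilisation, the more each
    useful vCPU-hour costs.
    """
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in the range (0, 1]")
    return node_price_per_hour / (node_vcpus * utilisation)


def break_even_utilisation(node_price_per_hour: float, node_vcpus: int,
                           serverless_price_per_vcpu_hour: float) -> float:
    """Node utilisation above which the cluster is cheaper per used vCPU-hour."""
    return node_price_per_hour / (node_vcpus * serverless_price_per_vcpu_hour)


# Hypothetical prices for illustration only.
NODE_PRICE = 0.20        # per node-hour, for a 4 vCPU node
NODE_VCPUS = 4
SERVERLESS_PRICE = 0.08  # per vCPU-hour on a serverless container engine

threshold = break_even_utilisation(NODE_PRICE, NODE_VCPUS, SERVERLESS_PRICE)
print(f"cluster is cheaper above {threshold:.1%} utilisation")

at_80 = cost_per_used_vcpu_hour(NODE_PRICE, NODE_VCPUS, 0.80)
print(f"per used vCPU-hour at 80%: {at_80:.4f} vs serverless {SERVERLESS_PRICE:.4f}")
```

With your own prices plugged in, the threshold tells you how busy your nodes need to be before the cluster pays for itself.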

Portability…

Each of the Serverless Container Engines uses its own proprietary software which, like many of the providers’ other services, ties you into their platform. It is possible to get around this by establishing complex Infrastructure as Code solutions to abstract away from a particular cloud vendor’s services; however, this comes at a high engineering cost.

Deciding to use a Serverless Container Engine for your business must be a strategic decision because, as with most consumed services such as FaaS and queuing services, it will ultimately tie some of your application into a vendor’s infrastructure. As with all strategic cloud decisions, this will be based purely on your business’s stance on a multi-cloud approach: are you happy to go all-in with a particular vendor, or do you want a more flexible (and more costly) approach?

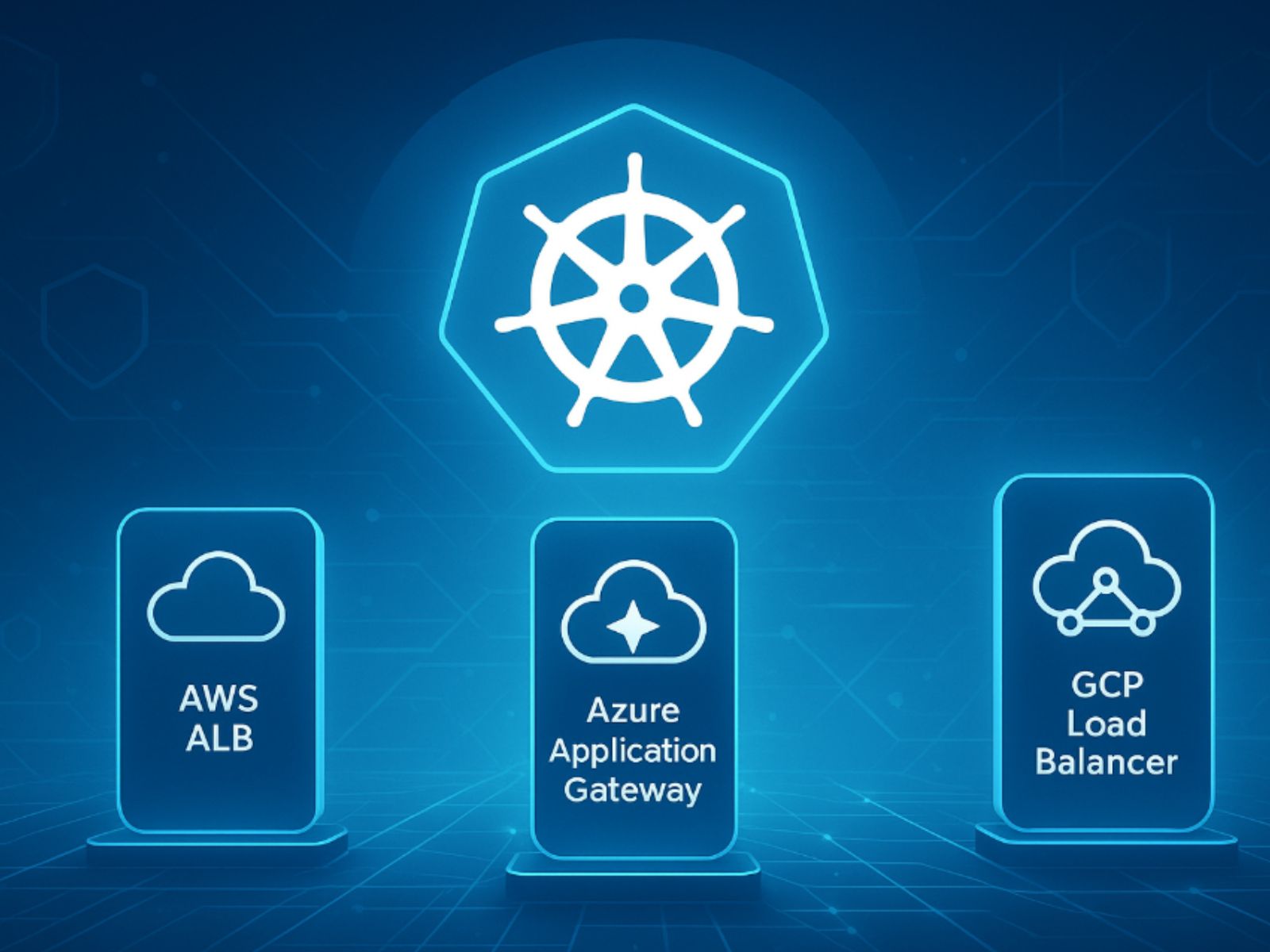

This is a major plus point for technologies like Kubernetes, which are supported across all major public cloud providers. Using Kubernetes across AWS & GCP, for example, businesses are able to migrate their applications between vendors easily (if they are designed to work with multiple services like SQS & PubSub).
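
One way to read "designed for multiple services" is that application code depends on a small interface rather than on a vendor SDK. The sketch below is a hypothetical illustration of that idea (Python 3.9+): `MessageQueue`, `InMemoryQueue` and `process_orders` are invented names, and the in-memory backend stands in for the SQS or PubSub adapters you would write yourself against each vendor's client library.

```python
from collections import deque
from typing import Optional, Protocol


class MessageQueue(Protocol):
    """The only queue operations the application is allowed to rely on."""

    def send(self, body: str) -> None: ...

    def receive(self) -> Optional[str]: ...


class InMemoryQueue:
    """Stand-in backend for local runs and tests.

    A real deployment would add one adapter per vendor queue with the same
    two methods; those adapters are deliberately not shown here.
    """

    def __init__(self) -> None:
        self._messages: deque[str] = deque()

    def send(self, body: str) -> None:
        self._messages.append(body)

    def receive(self) -> Optional[str]:
        # Return the oldest message, or None when the queue is empty.
        return self._messages.popleft() if self._messages else None


def process_orders(queue: MessageQueue) -> list[str]:
    """Application logic: drains the queue without knowing which vendor backs it."""
    handled = []
    while (message := queue.receive()) is not None:
        handled.append(message.upper())
    return handled


queue = InMemoryQueue()
queue.send("order-1")
queue.send("order-2")
print(process_orders(queue))  # ['ORDER-1', 'ORDER-2']
```

Moving between vendors then means swapping the adapter, not rewriting `process_orders`, although writing and maintaining those adapters is part of the engineering cost mentioned above.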

Limitations…

Serverless Container Engines are a relatively new service amongst Cloud Providers and therefore are limited in their features – especially when compared to container orchestration platforms such as Kubernetes.

For example, Azure Container Instances are relatively quick to get up and running, but there is no automatic scaling and instances are provisioned manually. AWS Fargate takes up to 20 minutes to provision all the underlying infrastructure, and unless you want to configure the underlying cluster there is no elastic scaling. GCP Cloud Run is the best of these services, providing auto-scaling and scale-to-zero out of the box, but even that has its limits, for example only being able to spin up single containers.
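
For readers new to the terms, auto-scaling with scale-to-zero can be pictured with the simplified model below. It is not Cloud Run's actual algorithm, and the parameter names and numbers are assumptions made for illustration; it only shows the idea that the instance count follows demand and drops to zero when there is no traffic.

```python
import math


def desired_instances(in_flight_requests: int, concurrency_per_instance: int,
                      max_instances: int) -> int:
    """Instances needed to serve the current load, capped at `max_instances`.

    Returns 0 when there are no requests, which is what scale-to-zero means:
    nothing is running (or billed) while the service is idle.
    """
    if concurrency_per_instance <= 0:
        raise ValueError("concurrency_per_instance must be positive")
    if in_flight_requests <= 0:
        return 0
    needed = math.ceil(in_flight_requests / concurrency_per_instance)
    return min(needed, max_instances)


# Hypothetical settings: each instance handles 80 concurrent requests,
# and the service may grow to at most 10 instances.
for load in (0, 1, 81, 10_000):
    print(load, "->", desired_instances(load, 80, 10))
```

A platform without this behaviour leaves you to pick and change the instance count yourself, which is the manual provisioning described above.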

In Summary…

If you’re looking to experiment with containers, or set up short-lived, predictable workloads, then Serverless Container Engines are a great option to get started with. Being able to quickly and easily run containers without requiring detailed cloud knowledge or undergoing the pain of managing the underlying infrastructure is a very attractive prospect.

However, for running complex, distributed systems, they simply don’t stack up. High costs and limitations in container orchestration make it almost impossible to design enterprise-grade systems to run on these services. Features such as automatic rollbacks, network routing, self-healing, health checks and load balancing, all needed for managing large-scale systems, are currently only available in orchestration platforms such as Kubernetes.

Be sure to check out our post on Elastic Beanstalk vs CloudFormation.