As a Kubernetes Managed Service Provider, Mobilise has worked with many customers. We design, build and run Kubernetes workloads across a variety of cloud providers. Traditionally, this has meant building worker nodes on virtual machines to run application workloads. However, newer technologies such as AWS Fargate have made it more appealing to do away with worker nodes altogether and run containers on a serverless compute engine. Before we jump into an EC2 vs Fargate comparison, we will look at each individually.

All information is accurate as of the time of publication (May 2021).

What is Fargate?

Fargate is AWS’ serverless compute engine for running containers. It is supported by Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS). So you might hear people saying, “We’re running EKS on Fargate” or “Our ECS platform runs on a mixture of EC2 and Fargate”.

The primary aim of Fargate is to remove the overhead of managing your own EC2 worker nodes. When you deploy a new application to EKS using Fargate, AWS provisions a new serverless compute environment to run it in. Once deployed, the workload looks almost identical to its EC2 equivalent:

- it still runs as a pod

- you use the same kubectl commands, and

- you can still retrieve its logs

The main difference to note is that a new Fargate node is deployed for each pod. Therefore, when you run the ‘kubectl get nodes’ command, you will see a one-to-one relationship between ‘nodes’ and your pods. On EC2 this would usually set alarm bells ringing, but it is simply Fargate’s way of letting you know how many environments it has provisioned.
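To see this one-to-one mapping on your own cluster, the sketch below counts Fargate nodes in the JSON output of `kubectl get nodes -o json`. It assumes Fargate nodes carry the `eks.amazonaws.com/compute-type: fargate` label, which EKS applied to Fargate nodes at the time of writing (check your own cluster); the function names are hypothetical.

```python
import json
import subprocess

# Assumed label that EKS puts on Fargate nodes; verify on your cluster.
FARGATE_LABEL = "eks.amazonaws.com/compute-type"


def count_nodes_by_compute_type(nodes: dict) -> dict:
    """Count nodes in `kubectl get nodes -o json` output by compute type."""
    counts = {"fargate": 0, "other": 0}
    for node in nodes.get("items", []):
        labels = node.get("metadata", {}).get("labels", {})
        if labels.get(FARGATE_LABEL) == "fargate":
            counts["fargate"] += 1
        else:
            counts["other"] += 1  # EC2 worker nodes (or anything unlabelled)
    return counts


def load_nodes_from_cluster() -> dict:
    """Run kubectl against the current context (requires cluster access)."""
    output = subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return json.loads(output)
```

Calling `count_nodes_by_compute_type(load_nodes_from_cluster())` on a cluster running only Fargate pods should report as many Fargate nodes as there are running pods.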

What are the main benefits of Fargate?

As previously mentioned, the main benefit is not having to provision EC2 infrastructure (you still need VPC infrastructure) or worry about upgrades and patching. But there are further benefits, such as:

- No EC2 node scaling to manage – provision more pods on Fargate and compute is provisioned for each one, within your account’s Fargate service quotas

- Right-sizing – AWS Fargate matches your workload’s requirements to a set of pre-configured CPU and memory price points (more on this later)

- Secure isolation by design – each EKS pod runs in its own dedicated kernel runtime environment and does not share CPU, memory or network resources with any other pod

What about pricing?

Fargate pricing is simple to calculate using the predefined price points.

The pricing table outlines which price categories (combinations of vCPU and memory) your Kubernetes workload can fit into. You are charged per second for vCPU, memory and storage (storage only if you go above the free 20 GB of ephemeral storage).

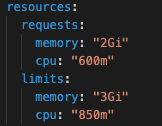

So, for example, let’s say we have a pod being deployed to Kubernetes that has the following configuration:

Here, our pod requests a minimum of 2 GB of memory and 0.6 vCPU. (If you don’t specify requests, Fargate provisions the smallest environment.) Fargate determines the pricing category by analysing the requests and then:

- Adding 256 MB of memory for the required Kubernetes components (kubelet, kube-proxy and containerd),

- Rounding the vCPU and memory up to the nearest available price category.
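The sizing steps above can be sketched in a few lines of Python. The size table reflects the EKS Fargate pod configurations documented around May 2021 (0.25 to 4 vCPU); check the current AWS documentation before relying on it, as sizes may have changed. AWS only says it rounds up to the closest matching configuration, so the tier-by-tier search here is our assumption, and `fargate_size` is a hypothetical helper.

```python
# Valid EKS Fargate pod sizes as documented around May 2021:
# vCPU -> allowed memory values in GB (check current AWS docs).
FARGATE_SIZES = {
    0.25: [0.5, 1, 2],
    0.5: [1, 2, 3, 4],
    1: [2, 3, 4, 5, 6, 7, 8],
    2: list(range(4, 17)),  # 4-16 GB in 1 GB steps
    4: list(range(8, 31)),  # 8-30 GB in 1 GB steps
}
KUBERNETES_OVERHEAD_GB = 0.25  # 256 MB for kubelet, kube-proxy and containerd


def fargate_size(cpu_request: float = 0.0, memory_request_gb: float = 0.0) -> tuple:
    """Return the smallest (vCPU, memory GB) size that fits the pod's requests."""
    memory_needed = memory_request_gb + KUBERNETES_OVERHEAD_GB
    for vcpu in sorted(FARGATE_SIZES):
        if vcpu < cpu_request:
            continue  # CPU is rounded up to the next vCPU tier
        for memory in FARGATE_SIZES[vcpu]:
            if memory >= memory_needed:
                return vcpu, memory
        # No memory value fits at this vCPU tier, so try the next tier up.
    raise ValueError("requests exceed the largest Fargate size")
```

With the example requests, `fargate_size(0.6, 2)` returns `(1, 3)`, the 1 vCPU x 3 GB category. A pod requesting 0.5 vCPU and 1.75 GB gets `(0.5, 2)`, and a pod with no requests gets the smallest size, `(0.25, 0.5)`.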

Therefore, our workload fits into the 1 vCPU x 3 GB price category (0.6 vCPU rounds up to 1 vCPU, and 2 GB + 256 MB rounds up to 3 GB). Currently, in Europe (London), pricing is as follows (billed per second and rounded up):

per vCPU per hour: $0.04656

per GB per hour: $0.00511

So if we wanted to run our workload 24 hours a day for 4 weeks, our charges would be as follows:

(number of vCPUs x hourly vCPU rate x hours per day x days) + (GB of memory x hourly GB rate x hours per day x days)

(1 x 0.04656 x 24 x 28) + (3 x 0.00511 x 24 x 28) = $41.59

So running our single pod in ‘always-on’ mode for a month would cost roughly $42. Because of the rounding up and the extra 256 MB that Fargate adds, some of that CPU and memory goes unused. It could therefore be worth trying to squeeze the workload into the category below (0.5 vCPU x 2 GB), which would cost roughly $22.50 a month; to fit, the pod would need to request no more than 0.5 vCPU and 1.75 GB of memory.
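The monthly figures above can be reproduced with a small helper. It is a sketch that uses only the London rates quoted in this post, covers a 28-day "month" of always-on running, and ignores storage and Savings Plans; `fargate_cost` is a hypothetical name.

```python
# Europe (London) Fargate rates quoted above (May 2021), in USD.
VCPU_RATE_PER_HOUR = 0.04656
GB_RATE_PER_HOUR = 0.00511


def fargate_cost(vcpu: float, memory_gb: float, days: int, hours_per_day: int = 24) -> float:
    """Cost of one always-on pod of the given Fargate size (no storage, no Savings Plans)."""
    hours = hours_per_day * days
    return (vcpu * VCPU_RATE_PER_HOUR * hours) + (memory_gb * GB_RATE_PER_HOUR * hours)


print(f"1 vCPU x 3 GB:   ${fargate_cost(1, 3, 28):.2f}")
print(f"0.5 vCPU x 2 GB: ${fargate_cost(0.5, 2, 28):.2f}")
```

This prints $41.59 for the 1 vCPU x 3 GB category and $22.51 for the 0.5 vCPU x 2 GB category.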

Okay – so how does this compare to EC2?

This is where pricing gets a little more complicated, as it depends on several factors. This blog written by AWS does a great job of outlining the comparisons in detail; we’ll try to summarise the main points here.

For comparisons between EKS Fargate and EC2, the following factors need to be taken into account:

- How densely packed your EC2 instances are,

- Whether you’re taking advantage of EC2 Spot (there is currently no Fargate Spot support for EKS, only for ECS),

- Whether you’re taking advantage of AWS Savings Plans

As there is no EKS Fargate Spot pricing yet, Spot appears to be the primary cost factor when comparing the services. For example, our single workload above still costs roughly $22.50 a month even when squeezed into the lower price category. By comparison, an EC2 t3.medium Spot Instance (2 vCPU and 4 GB memory) costs just $9.50 a month, and we could potentially run two such workloads on it. So you get roughly 4x the CPU and 2x the memory (minus OS requirements) for just over 40% of the Fargate cost.
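The ratios in that comparison are simple arithmetic; the sketch below makes them explicit. It uses only the figures quoted in this post ($9.50 a month for the t3.medium, and $22.51 for the 0.5 vCPU x 2 GB Fargate category at the London rates), and like the text it ignores OS and kubelet reservations on the EC2 side.

```python
# Figures quoted in the comparison above (USD per 28-day month).
FARGATE_VCPU, FARGATE_GB = 0.5, 2.0  # smaller Fargate category
FARGATE_MONTHLY = 22.51
EC2_VCPU, EC2_GB = 2.0, 4.0          # t3.medium
EC2_MONTHLY = 9.50                   # Spot price as quoted in the text

cpu_ratio = EC2_VCPU / FARGATE_VCPU
memory_ratio = EC2_GB / FARGATE_GB
cost_ratio = EC2_MONTHLY / FARGATE_MONTHLY

print(f"{cpu_ratio:.0f}x CPU, {memory_ratio:.0f}x memory for {cost_ratio:.0%} of the cost")
```

This prints "4x CPU, 2x memory for 42% of the cost". Bear in mind that t3 instances are burstable, so the full 2 vCPU is not available continuously.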

If you compare on-demand pricing (EKS Fargate Spot may well be released soon, which would change the comparison), then the primary factor is how densely packed your EC2 instances are: that is, how much of each instance’s CPU and memory you actually use, leaving as little as possible idle.

On this comparison, EC2 becomes the cheaper option as you approach an instance’s resource limits (vCPU and memory); very roughly, utilisation needs to be 80% or more for both. This is usually only achievable with predictable, steady workloads, so effort must go into scheduling workloads effectively to make the most of each EC2 instance.
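One rough way to estimate that break-even point is to price an instance’s full vCPU and memory at Fargate rates and divide the instance’s hourly price by it. This is a simplified model of our own (not the method from the AWS blog): it assumes Fargate bills only for capacity you use and ignores Fargate rounding, the 256 MB overhead and OS reservations. The instance size and $0.11/hour price in the example are hypothetical, not quoted AWS prices.

```python
# Fargate rates for Europe (London) quoted in this post (May 2021), in USD.
VCPU_RATE_PER_HOUR = 0.04656
GB_RATE_PER_HOUR = 0.00511


def break_even_utilisation(instance_vcpu: float, instance_memory_gb: float,
                           instance_price_per_hour: float) -> float:
    """Fraction of an instance's vCPU *and* memory you must use before EC2 beats Fargate.

    Simplified model: EC2 bills for the whole instance, Fargate only for what you use.
    A result above 1.0 means Fargate is cheaper even at full utilisation.
    """
    fargate_price_for_whole_instance = (
        instance_vcpu * VCPU_RATE_PER_HOUR + instance_memory_gb * GB_RATE_PER_HOUR
    )
    return instance_price_per_hour / fargate_price_for_whole_instance


# Hypothetical instance: 2 vCPU, 8 GB, $0.11 per hour on-demand.
print(f"{break_even_utilisation(2, 8, 0.11):.0%}")
```

For this hypothetical instance the break-even is roughly 82%; plug in the real on-demand price for your instance type and region to get your own figure.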

Both EC2 and Fargate workloads can be made even cheaper by applying AWS Savings Plans.

AWS Fargate sounds great! Are there any drawbacks?

AWS Fargate was first launched in November 2017, and whilst there have been many improvements since, there are still some fundamental limitations when it comes to combining EKS with Fargate:

- Without EKS Fargate Spot, EC2 is usually cheaper.

- DaemonSets are not supported on Fargate. This means you will need to re-engineer solutions (such as pre-made Helm charts) to run as sidecar containers in your pods instead.

- Privileged containers are not supported (although avoiding them is generally best practice anyway)

- GPUs are currently not available in Fargate.

- Only private subnets are supported (running workloads in private subnets is generally best practice)

- Amazon CloudWatch Container Insights is currently unsupported on EKS Fargate

So which solution do I choose? Fargate vs EC2?

Well, it’s not an easy answer, and at the moment it comes down to your specific use cases. Bear in mind, too, that it isn’t an either/or choice. You can run both side by side, deploying most workloads to Fargate and the more ‘needy’ workloads to EC2. Both sets of workloads appear identical when deployed to the same EKS cluster, so users don’t need to know which is running where.
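Which workloads land on Fargate is decided by the selectors in a Fargate profile: a pod whose namespace (and, optionally, labels) matches a selector runs on Fargate, and everything else is scheduled onto your EC2 nodes. The sketch below models that matching rule in plain Python as a simplified illustration; it is not EKS code, and the namespaces and labels are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Selector:
    """One Fargate profile selector: a namespace plus optional labels."""
    namespace: str
    labels: dict = field(default_factory=dict)


def runs_on_fargate(pod_namespace: str, pod_labels: dict, selectors: list) -> bool:
    """True if the pod matches any selector: same namespace and every selector label present."""
    for selector in selectors:
        if selector.namespace != pod_namespace:
            continue
        if all(pod_labels.get(key) == value for key, value in selector.labels.items()):
            return True
    return False


# Hypothetical profile: everything in "apps", plus pods labelled compute=fargate in "batch".
profile = [Selector("apps"), Selector("batch", {"compute": "fargate"})]
```

Here a pod in `apps` would run on Fargate, an unlabelled pod in `batch` would stay on EC2, and adding the `compute: fargate` label moves it to Fargate, which is how the ‘needy’ workloads can be kept on EC2 within the same cluster.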

If you’re not too comfortable with EKS or with managing and upgrading your own EC2 instances (although EKS has made this much simpler with managed node groups), then Fargate could be the right solution for you. It offers minimal operational overhead; however, as a managed service, it will usually cost a little more to run.

That being said, if you’re looking for supported solutions that will just run on EKS, such as pre-built Helm charts, you may need to re-engineer some of those charts to run them on Fargate and work around its limitations (such as the lack of DaemonSet support).

If you’re comfortable deploying EC2 worker nodes, are comfortable using EC2 Spot and have all your infrastructure as code established, there probably isn’t enough reason to migrate to Fargate yet (at least until EKS Fargate Spot is supported).

For now, the best approach is probably to try it for yourself on an existing EKS cluster: create a Fargate profile targeting a new namespace and deploy new workloads to it (ones that don’t need DaemonSets etc.). Monitor how the workloads perform, track your billing and day-to-day operational experience with both solutions, and decide based on cost, ease of use and compatibility.
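As a starting point for that trial, the sketch below builds the arguments for boto3’s EKS `create_fargate_profile` call for a single new namespace. The cluster name, namespace, IAM role ARN and subnet IDs are hypothetical placeholders; the pod execution role must already exist, and the subnets must be private. Only `create_trial_profile` talks to AWS, so the request builder can be checked without credentials.

```python
def fargate_profile_request(cluster: str, namespace: str, role_arn: str,
                            private_subnets: list) -> dict:
    """Build keyword arguments for boto3's EKS create_fargate_profile call."""
    return {
        "fargateProfileName": f"{namespace}-fargate",
        "clusterName": cluster,
        "podExecutionRoleArn": role_arn,  # role Fargate uses, e.g. to pull images from ECR
        "subnets": private_subnets,        # Fargate pods only support private subnets
        "selectors": [{"namespace": namespace}],
    }


def create_trial_profile(request: dict, region: str = "eu-west-2") -> dict:
    """Create the profile in AWS (needs boto3 installed and AWS credentials)."""
    import boto3  # imported here so the request builder works without boto3

    eks = boto3.client("eks", region_name=region)
    return eks.create_fargate_profile(**request)


# Hypothetical values: replace with your own cluster, role and private subnets.
request = fargate_profile_request(
    cluster="my-cluster",
    namespace="fargate-trial",
    role_arn="arn:aws:iam::123456789012:role/FargatePodExecutionRole",
    private_subnets=["subnet-0abc", "subnet-0def"],
)
```

Once `create_trial_profile(request)` has completed, any new pod deployed to the `fargate-trial` namespace should be scheduled onto Fargate, while existing workloads stay on your EC2 nodes.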

Looking for help with your Kubernetes deployment? Email: info@mobilise.cloud.